How To Remove The Previous Update In Centos7


Slurm

Slurm is a highly configurable open source workload manager. See the Slurm project site for an overview.

Slurm can easily be enabled on a CycleCloud cluster by modifying the "run_list" in the configuration section of your cluster definition. The two basic components of a Slurm cluster are the 'master' (or 'scheduler') node, which provides a shared filesystem on which the Slurm software runs, and the 'execute' nodes, which are the hosts that mount the shared filesystem and execute the submitted jobs. For example, a simple cluster template snippet may look like:


    [cluster custom-slurm]

    [[node scheduler]]
        ImageName = cycle.image.centos7
        MachineType = Standard_A4 # 8 cores

        [[[cluster-init cyclecloud/slurm:default]]]
        [[[cluster-init cyclecloud/slurm:scheduler]]]
        [[[configuration]]]
        run_list = role[slurm_scheduler_role]

    [[nodearray execute]]
        ImageName = cycle.image.centos7
        MachineType = Standard_A1 # 1 core

        [[[cluster-init cyclecloud/slurm:default]]]
        [[[cluster-init cyclecloud/slurm:execute]]]
        [[[configuration]]]
        run_list = role[slurm_scheduler_role]
        slurm.autoscale = true
        # Set to true if nodes are used for tightly-coupled multi-node jobs
        slurm.hpc = true
        slurm.default_partition = true

Editing Existing Slurm Clusters

Slurm clusters running in CycleCloud versions 7.8 and later implement an updated version of the autoscaling APIs that allows the clusters to use multiple nodearrays and partitions. To support this functionality in Slurm, CycleCloud pre-populates the execute nodes in the cluster. Because of this, you need to run a command on the Slurm scheduler node after making any changes to the cluster, such as autoscale limits or VM types.

Making Cluster Changes

The Slurm cluster deployed in CycleCloud contains a script that facilitates this. After making any changes to the cluster, run the following commands as root (e.g., after running sudo -i) on the Slurm scheduler node to rebuild slurm.conf and update the nodes in the cluster:

    cd /opt/cycle/slurm
    ./cyclecloud_slurm.sh scale

Note

For CycleCloud versions < 7.9.10, the script is located under /opt/cycle/jetpack/system/bootstrap/slurm.

Note

For CycleCloud versions > 8.2, the script is located under /opt/cycle/slurm.
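The script's location depends on the CycleCloud version, so a small helper can pick the right path before running the scale step. The sketch below is a minimal illustration and assumes the version is available as a dotted numeric string such as "8.1.0". The function names are hypothetical. The paths are the ones given in this section, and every version from 7.9.10 onward is assumed to use /opt/cycle/slurm.

```python
from pathlib import PurePosixPath

# Paths from the notes above. The 7.9.10 cut-off is compared as a tuple of ints.
LEGACY_DIR = PurePosixPath("/opt/cycle/jetpack/system/bootstrap/slurm")
CURRENT_DIR = PurePosixPath("/opt/cycle/slurm")


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '7.9.10' into (7, 9, 10)."""
    return tuple(int(part) for part in text.split("."))


def slurm_script_dir(cyclecloud_version: str) -> PurePosixPath:
    """Return the directory holding cyclecloud_slurm.sh (hypothetical helper)."""
    if parse_version(cyclecloud_version) < (7, 9, 10):
        return LEGACY_DIR
    # Assumed: 7.9.10 and later, including releases after 8.2, use /opt/cycle/slurm.
    return CURRENT_DIR


def scale_command(cyclecloud_version: str) -> list[str]:
    """Build the argv for the 'scale' step, to be run as root on the scheduler."""
    script = slurm_script_dir(cyclecloud_version) / "cyclecloud_slurm.sh"
    return [str(script), "scale"]
```

On the scheduler node, the returned list could be passed to `subprocess.run(..., check=True)` from a root shell. Running the two shell commands above by hand does the same thing.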

Removing all execute nodes

Because all the Slurm compute nodes have to be pre-created, all nodes in a cluster must be completely removed when making big changes, such as changing the VM type or image. It is possible to remove all nodes via the UI. The cyclecloud_slurm.sh script also has a remove_nodes option that removes any nodes that aren't currently running jobs.
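The rule above can be summarized as a plan of script invocations. Any change is followed by scale, and a VM type or image change also needs node removal. This is a hypothetical sketch. Which settings count as "big changes" is an assumption based on the two examples given here, and running remove_nodes before scale is an assumed ordering.

```python
# Hypothetical: template settings treated as "big changes" (from the examples above).
NODE_REPLACING_SETTINGS = {"MachineType", "ImageName"}


def needs_node_removal(changed_settings: set[str]) -> bool:
    """True if existing execute nodes must be removed before applying the change."""
    return bool(changed_settings & NODE_REPLACING_SETTINGS)


def update_plan(changed_settings: set[str]) -> list[list[str]]:
    """Return the cyclecloud_slurm.sh invocations to run, in order."""
    steps = []
    if needs_node_removal(changed_settings):
        # remove_nodes only removes nodes that aren't currently running jobs
        steps.append(["./cyclecloud_slurm.sh", "remove_nodes"])
    # scale rebuilds slurm.conf and updates the nodes after any change
    steps.append(["./cyclecloud_slurm.sh", "scale"])
    return steps
```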

Creating additional partitions

The default template that ships with Azure CycleCloud has two partitions (hpc and htc), and you can define custom nodearrays that map directly to Slurm partitions. For example, to create a GPU partition, add the following section to your cluster template:

    [[nodearray gpu]]
        MachineType = $GPUMachineType
        ImageName = $GPUImageName
        MaxCoreCount = $MaxGPUExecuteCoreCount
        Interruptible = $GPUUseLowPrio
        AdditionalClusterInitSpecs = $ExecuteClusterInitSpecs

        [[[configuration]]]
        slurm.autoscale = true
        # Set to true if nodes are used for tightly-coupled multi-node jobs
        slurm.hpc = false

        [[[cluster-init cyclecloud/slurm:execute:2.0.1]]]
        [[[network-interface eth0]]]
        AssociatePublicIpAddress = $ExecuteNodesPublic

Memory settings

CycleCloud automatically sets the amount of available memory for Slurm to use for scheduling purposes. The amount of available memory can change slightly due to different Linux kernel options, and the OS and VM can use up a small amount of memory that would otherwise be available for jobs. For these reasons, CycleCloud automatically reduces the amount of memory in the Slurm configuration. By default, CycleCloud holds back 5% of the reported available memory in a VM. You can override this value in the cluster template by setting slurm.dampen_memory to the percentage of memory to hold back. For example, to hold back 20% of a VM's memory:

    slurm.dampen_memory=20

Disabling autoscale for specific nodes or partitions

The built-in CycleCloud "KeepAlive" feature does not currently work for Slurm clusters. However, you can disable autoscale for a running Slurm cluster by editing the slurm.conf file directly. You can exclude either individual nodes or entire partitions from being autoscaled.

Excluding a node

To exclude one or more nodes from autoscale, add SuspendExcNodes=<listofnodes> to the Slurm configuration file. For example, to exclude nodes 1 and 2 from the hpc partition, add the following to /etc/slurm/slurm.conf:

    SuspendExcNodes=hpc-pg0-[1-2]

Then restart the slurmctld service for the new configuration to take effect.
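SuspendExcNodes takes a Slurm host list, so hpc-pg0-[1-2] stands for hpc-pg0-1 and hpc-pg0-2. The sketch below expands simple expressions that contain at most one bracket group, to show which nodes a given value excludes. It is an illustration only, and `expand_hostlist` is a hypothetical name. On a real cluster, `scontrol show hostnames hpc-pg0-[1-2]` is the authoritative way to expand a list.

```python
import re

# A prefix, one bracketed group of ranges, and an optional suffix.
_BRACKET = re.compile(r"^(?P<prefix>[^\[]*)\[(?P<ranges>[^\]]+)\](?P<suffix>.*)$")


def expand_hostlist(expr: str) -> list[str]:
    """Expand a simple Slurm-style host list with at most one bracket group."""
    match = _BRACKET.match(expr)
    if match is None:
        return [expr]  # plain node name, nothing to expand
    prefix, suffix = match.group("prefix"), match.group("suffix")
    names = []
    for part in match.group("ranges").split(","):
        start, _, end = part.partition("-")
        end = end or start            # a single number such as "5"
        width = len(start)            # preserve zero padding such as "01"
        for number in range(int(start), int(end) + 1):
            names.append(f"{prefix}{number:0{width}d}{suffix}")
    return names
```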

Excluding a partition

Excluding entire partitions from autoscale is similar to excluding nodes. To exclude the entire hpc partition, add the following to /etc/slurm/slurm.conf:

    SuspendExcParts=hpc

Then restart the slurmctld service.
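When scripting either exclusion, it helps to make the edit idempotent so that repeated runs don't accumulate duplicate lines. This is a minimal sketch, assuming the caller reads and writes the file contents. The function name is hypothetical, keys are matched case-sensitively, and an existing value for the same key is replaced rather than merged.

```python
def add_suspend_exclusions(conf_text: str, nodes: str = "", parts: str = "") -> str:
    """Return slurm.conf text with SuspendExcNodes and/or SuspendExcParts set.

    Any existing line for the same key is dropped and the new value appended,
    so running this twice with the same arguments changes nothing.
    """
    wanted = {}
    if nodes:
        wanted["SuspendExcNodes"] = nodes
    if parts:
        wanted["SuspendExcParts"] = parts
    kept = [
        line
        for line in conf_text.splitlines()
        if line.split("=", 1)[0].strip() not in wanted
    ]
    kept.extend(f"{key}={value}" for key, value in wanted.items())
    return "\n".join(kept) + "\n"
```

After writing the result back to /etc/slurm/slurm.conf, restart the controller. On systemd-based images such as CentOS 7, you can do this with `systemctl restart slurmctld`.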

Troubleshooting

UID conflicts for Slurm and Munge users

By default, this project uses a UID and GID of 11100 for the Slurm user and 11101 for the Munge user. If this causes a conflict with another user or group, these defaults may be overridden.
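Before deciding whether to override the defaults, you can check whether IDs 11100 and 11101 are already taken on a node. This Python sketch compares the expected IDs against the local passwd and group databases using the standard pwd and grp modules. The function names are hypothetical. Accounts served by LDAP or similar directories may not appear in getpwall() or getgrall() if enumeration is disabled.

```python
import grp
import pwd

# Defaults stated in this article: UID/GID 11100 for slurm, 11101 for munge.
DEFAULT_IDS = {"slurm": 11100, "munge": 11101}


def find_conflicts(
    expected: dict[str, int], users: dict[int, str], groups: dict[int, str]
) -> list[str]:
    """List IDs already used by a differently named user or group."""
    problems = []
    for name, number in expected.items():
        owner = users.get(number)
        if owner is not None and owner != name:
            problems.append(f"UID {number} wanted for {name} is used by user {owner}")
        group = groups.get(number)
        if group is not None and group != name:
            problems.append(f"GID {number} wanted for {name} is used by group {group}")
    return problems


def local_conflicts() -> list[str]:
    """Run the check against this host's passwd and group databases."""
    users = {entry.pw_uid: entry.pw_name for entry in pwd.getpwall()}
    groups = {entry.gr_gid: entry.gr_name for entry in grp.getgrall()}
    return find_conflicts(DEFAULT_IDS, users, groups)
```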

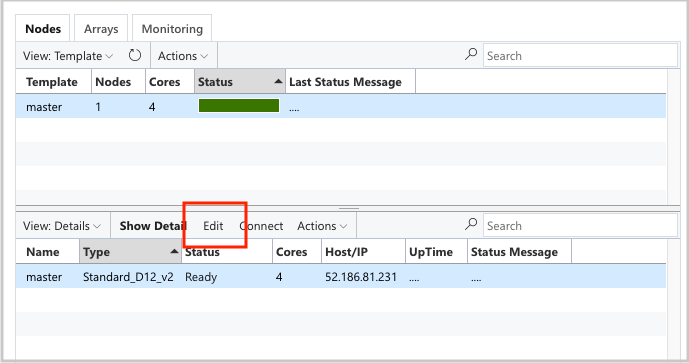

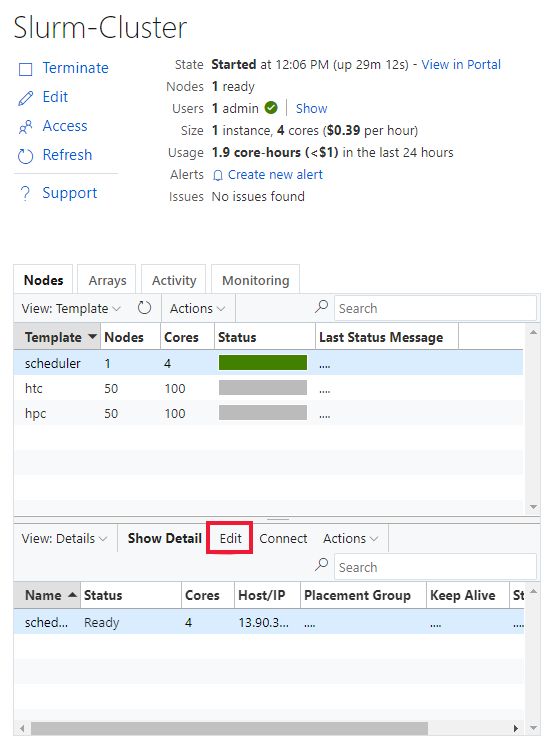

To override the UID and GID, click the edit button for both the scheduler node:

And the execute nodearray:

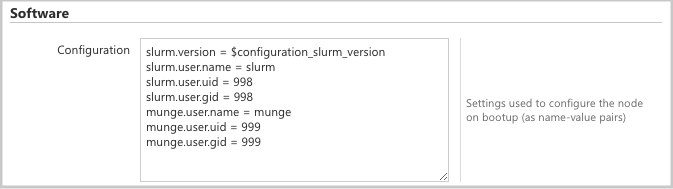

and add the following attributes to the Configuration section:

    slurm.user.name = slurm
    slurm.user.uid = 11100
    slurm.user.gid = 11100
    munge.user.name = munge
    munge.user.uid = 11101
    munge.user.gid = 11101

Autoscale

CycleCloud uses Slurm's Elastic Computing feature. To debug autoscale issues, there are a few logs on the scheduler node you can check. First, check /var/log/slurmctld/slurmctld.log to make sure that the power save resume calls are being made. You should see lines like:

    [2019-12-09T21:19:03.400] power_save: pid 8629 waking nodes htc-1

The other log to check is /var/log/slurmctld/resume.log. If the resume step is failing, there will also be a /var/log/slurmctld/resume_fail.log. If there are messages about unknown or invalid node names, make sure you haven't added nodes to the cluster without following the steps in the "Making Cluster Changes" section above.
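To scan slurmctld.log for resume calls, the example line above can be turned into a pattern. This sketch assumes the log format matches that single example exactly. Other Slurm versions may word the message differently, so treat the regular expression as a starting point. `resume_events` is a hypothetical name.

```python
import re

# Modeled on: [2019-12-09T21:19:03.400] power_save: pid 8629 waking nodes htc-1
WAKE_LINE = re.compile(
    r"^\[(?P<time>[^\]]+)\] power_save: pid (?P<pid>\d+) waking nodes (?P<nodes>\S+)"
)


def resume_events(log_lines):
    """Yield (timestamp, pid, nodes) for each power-save resume call found."""
    for line in log_lines:
        match = WAKE_LINE.match(line)
        if match:
            yield match.group("time"), int(match.group("pid")), match.group("nodes")
```

For example, iterate over `open("/var/log/slurmctld/slurmctld.log")` and print each event. If nothing is yielded while jobs are pending, the resume calls are likely not being made.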

Slurm Configuration Reference

The following are the Slurm-specific configuration options you can toggle to customize functionality:

| Slurm-Specific Configuration Option | Description |
|---|---|
| slurm.version | Default: '18.08.7-1'. The Slurm version to install and run. This is currently the default and only option. Additional versions of the Slurm software may be supported in the future. |
| slurm.autoscale | Default: 'false'. A per-nodearray setting that controls whether Slurm should automatically stop and start nodes in this nodearray. |
| slurm.hpc | Default: 'true'. A per-nodearray setting that controls whether nodes in the nodearray are placed in the same placement group. Primarily used for nodearrays using VM families with InfiniBand. It only applies when slurm.autoscale is set to 'true'. |
| slurm.default_partition | Default: 'false'. A per-nodearray setting that controls whether the nodearray is the default partition for jobs that don't request a partition explicitly. |
| slurm.dampen_memory | Default: '5'. The percentage of memory to hold back for OS/VM overhead. |
| slurm.suspend_timeout | Default: '600'. The amount of time (in seconds) between a suspend call and when that node can be used again. |
| slurm.resume_timeout | Default: '1800'. The amount of time (in seconds) to wait for a node to successfully boot. |
| slurm.install | Default: 'true'. Determines whether Slurm is installed at node boot ('true'). If Slurm is installed in a custom image, set this to 'false'. (project version 2.5.0+) |
| slurm.use_pcpu | Default: 'true'. A per-nodearray setting to control scheduling with hyperthreaded vCPUs. Set to 'false' to set CPUs=vcpus in cyclecloud.conf. |
| slurm.user.name | Default: 'slurm'. The username for the Slurm service to use. |
| slurm.user.uid | Default: '11100'. The user ID to use for the Slurm user. |
| slurm.user.gid | Default: '11100'. The group ID to use for the Slurm user. |
| munge.user.name | Default: 'munge'. The username for the MUNGE authentication service to use. |
| munge.user.uid | Default: '11101'. The user ID to use for the MUNGE user. |
| munge.user.gid | Default: '11101'. The group ID to use for the MUNGE user. |
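The defaults above can be combined with template overrides to estimate how much memory Slurm will schedule on a node. This is a minimal sketch, assuming the hold-back is a plain percentage of the reported memory, rounded down to whole megabytes. CycleCloud's own calculation may round differently, and the helper names are hypothetical.

```python
# Defaults copied from the configuration reference table above.
SLURM_DEFAULTS = {
    "slurm.autoscale": False,
    "slurm.hpc": True,
    "slurm.default_partition": False,
    "slurm.dampen_memory": 5,        # percent of memory held back
    "slurm.suspend_timeout": 600,    # seconds
    "slurm.resume_timeout": 1800,    # seconds
    "slurm.install": True,
    "slurm.use_pcpu": True,
}


def effective_settings(overrides: dict) -> dict:
    """Merge template overrides on top of the documented defaults."""
    merged = dict(SLURM_DEFAULTS)
    merged.update(overrides)
    return merged


def schedulable_memory_mb(reported_mb: int, settings: dict) -> int:
    """Memory left for Slurm after holding back slurm.dampen_memory percent.

    Integer arithmetic rounds down to whole megabytes (an assumption).
    """
    percent = int(settings["slurm.dampen_memory"])
    return reported_mb * (100 - percent) // 100
```

Under these assumptions, a VM reporting 16384 MB leaves 15564 MB with the default 5%, and 13107 MB with slurm.dampen_memory=20.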

CycleCloud supports a standard set of autostop attributes across schedulers:

| Attribute | Description |
|---|---|
| cyclecloud.cluster.autoscale.stop_enabled | Whether autostop is enabled on this node. [true/false] |
| cyclecloud.cluster.autoscale.idle_time_after_jobs | The amount of time (in seconds) a node sits idle after completing jobs before it is scaled down. |
| cyclecloud.cluster.autoscale.idle_time_before_jobs | The amount of time (in seconds) a node sits idle before running any jobs before it is scaled down. |
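The three autostop attributes interact roughly as follows. A node that has run jobs is measured against the "after jobs" threshold, and a node that has not is measured against the "before jobs" threshold. This is an illustration of the table above, not CycleCloud's autoscaler code. The class and function names are hypothetical, and treating the threshold as inclusive (`>=`) is an assumption.

```python
from dataclasses import dataclass


@dataclass
class AutostopSettings:
    stop_enabled: bool          # cyclecloud.cluster.autoscale.stop_enabled
    idle_time_after_jobs: int   # seconds, cyclecloud.cluster.autoscale.idle_time_after_jobs
    idle_time_before_jobs: int  # seconds, cyclecloud.cluster.autoscale.idle_time_before_jobs


def should_stop(settings: AutostopSettings, idle_seconds: int, has_run_jobs: bool) -> bool:
    """Decide whether an idle node has passed its autostop threshold."""
    if not settings.stop_enabled:
        return False
    limit = settings.idle_time_after_jobs if has_run_jobs else settings.idle_time_before_jobs
    return idle_seconds >= limit
```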


Source: https://docs.microsoft.com/en-us/azure/cyclecloud/slurm

Posted by: nelsonvoked1938.blogspot.com
